Toyota Research Institute (TRI) announced a breakthrough generative AI approach based on Diffusion Policy to quickly and confidently teach robots new, dexterous skills. According to the researchers, this advance significantly improves the utility of robots and is a step toward building Large Behavior Models (LBMs) for robots, analogous to the Large Language Models (LLMs) that have recently revolutionized conversational AI.

“Our research in robotics is aimed at amplifying people rather than replacing them,” said Gill Pratt, CEO of TRI and Chief Scientist for Toyota Motor Corporation. “This new teaching technique is both very efficient and produces very high performing behaviors, enabling robots to much more effectively amplify people in many ways.”

New Generative AI Technique Brings Researchers One Step Closer to Building a “Large Behavior Model”

Previous state-of-the-art techniques for teaching robots new behaviors were slow, inconsistent, inefficient, and often limited to narrowly defined tasks performed in highly constrained environments. Roboticists needed to spend many hours writing sophisticated code and/or running numerous trial-and-error cycles to program behaviors.

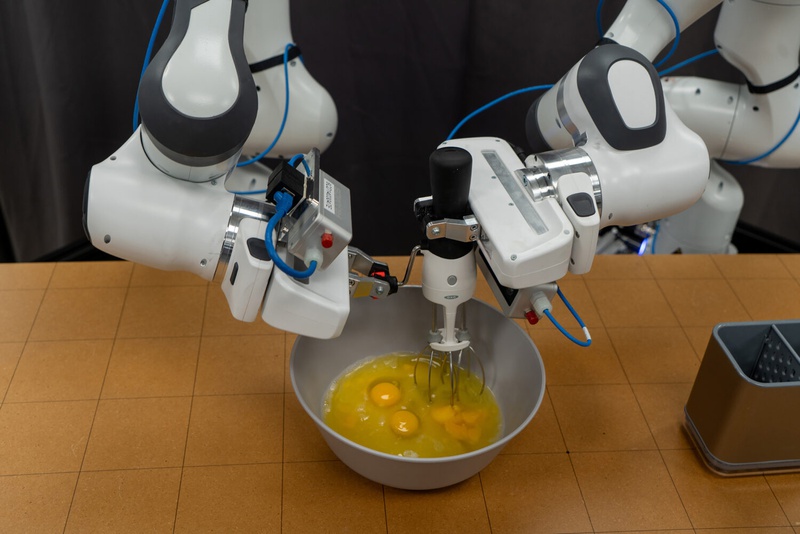

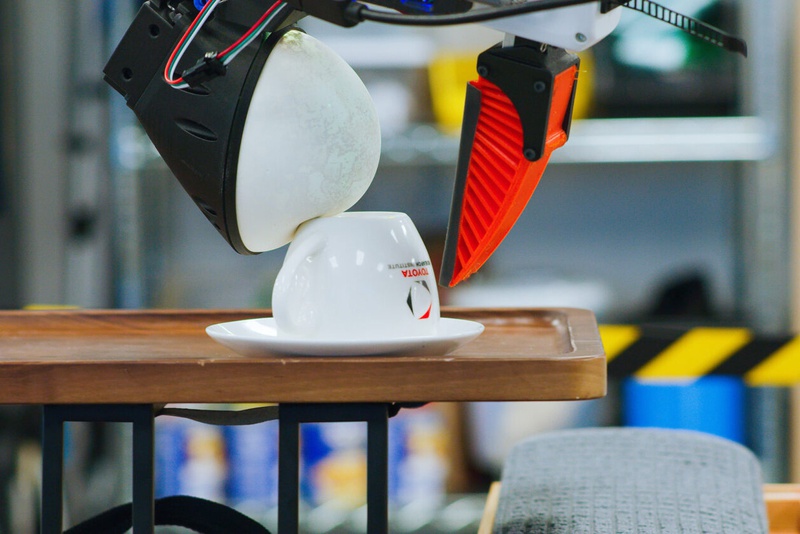

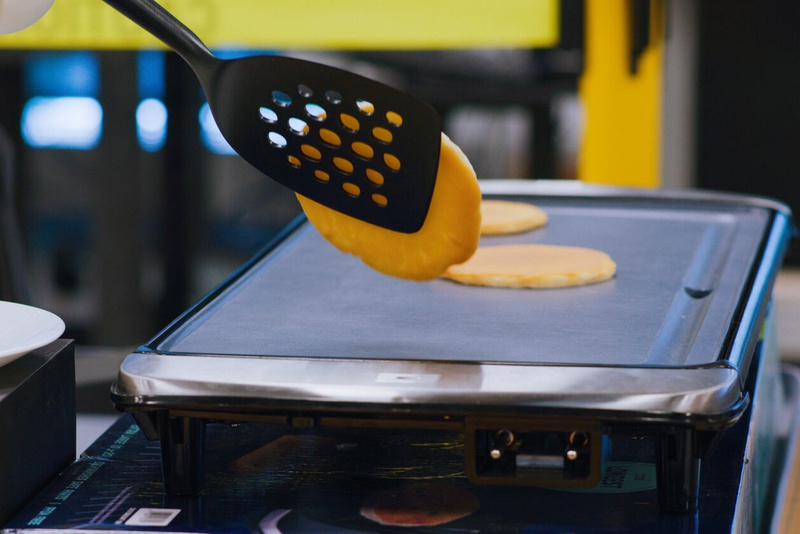

TRI has already taught robots more than 60 difficult, dexterous skills using the new approach, including pouring liquids, using tools, and manipulating deformable objects. These achievements were realized without writing a single line of new code; the only change was supplying the robot with new data. Building on this success, TRI has set an ambitious target of teaching hundreds of new skills by the end of the year and 1,000 by the end of 2024.

Today's news also highlights that robots can be taught to function in new scenarios and perform a wide range of behaviors. These capabilities are not limited to "pick and place," that is, simply picking up objects and putting them down in new locations. TRI's robots can now interact with the world in diverse and rich ways, which could someday enable robots to assist humans in everyday situations and in unpredictable, changing environments.

“The tasks that I’m watching these robots perform are simply amazing – even one year ago, I would not have predicted that we were close to this level of diverse dexterity,” remarked Russ Tedrake, Vice President of Robotics Research at TRI. Dr. Tedrake, who is also the Toyota Professor of Electrical Engineering and Computer Science, Aeronautics and Astronautics, and Mechanical Engineering at MIT, explained, “What is so exciting about this new approach is the rate and reliability with which we can add new skills. Because these skills work directly from camera images and tactile sensing, using only learned representations, they are able to perform well even on tasks that involve deformable objects, cloth, and liquids — all of which have traditionally been extremely difficult for robots.”

In more technical terms, TRI's robot behavior model learns from demonstrations that a human teacher provides through a haptic interface, combined with a language description of the goal. It then uses Diffusion Policy, a generative AI technique, to learn the demonstrated skill. With this process, a new behavior can be learned from dozens of demonstrations and then deployed autonomously. The approach produces consistent, repeatable, high-performing results, and it does so remarkably quickly.
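To make the "diffusion policy" idea more concrete, here is a minimal sketch of how a diffusion-based policy can generate actions, assuming a standard DDPM-style sampler. This is not TRI's implementation. The policy starts from random noise and repeatedly "denoises" it into a short action sequence, conditioned on what the robot observes. In a real system, a neural network trained on the demonstrations predicts the noise at each step. Here, `toy_noise_predictor`, `demo_trajectory`, the linear noise schedule, and all parameter values are hypothetical stand-ins chosen so that the example runs without any training.

```python
import numpy as np


def make_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Linear DDPM noise schedule (an illustrative assumption; other schedules exist)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def demo_trajectory(goal: np.ndarray, horizon: int) -> np.ndarray:
    """Hypothetical stand-in for a demonstrated action sequence:
    `horizon` waypoints on a straight line from the origin to `goal`."""
    fractions = np.linspace(0.0, 1.0, horizon)[:, None]
    return fractions * goal[None, :]


def toy_noise_predictor(x_t, t, obs, alpha_bars, horizon):
    """Hypothetical noise predictor. A trained network would go here.
    This one is the exact noise predictor when every demonstration for
    `obs` is the same trajectory, so sampling should recover that trajectory."""
    target = demo_trajectory(obs, horizon)
    return (x_t - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])


def sample_actions(obs, horizon=8, action_dim=2, num_steps=100, seed=0):
    """DDPM reverse process: start from Gaussian noise and iteratively denoise
    an action sequence of shape (horizon, action_dim), conditioned on `obs`."""
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = rng.standard_normal((horizon, action_dim))  # pure noise at step T
    for t in reversed(range(num_steps)):
        eps = toy_noise_predictor(x, t, obs, alpha_bars, horizon)
        # Mean of x_{t-1}: remove the predicted noise component, then rescale.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Intermediate steps re-inject a small amount of fresh noise.
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
        else:
            x = mean  # the final step adds no noise
    return x


goal = np.array([0.3, -0.1])  # hypothetical observation: a 2-D goal position
actions = sample_actions(goal)
print(actions.shape)  # (8, 2)
```

Generating a whole sequence of actions at once, rather than a single action per step, reflects how diffusion policies are commonly described. Under this framing, teaching a new skill mainly means training the noise predictor on new demonstration data while the sampling loop stays the same.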

Source: Toyota